Artificial Us

2024

Unequal Access, Unequal Representation

HOW DOES IT WORK?

Embodiment of the System

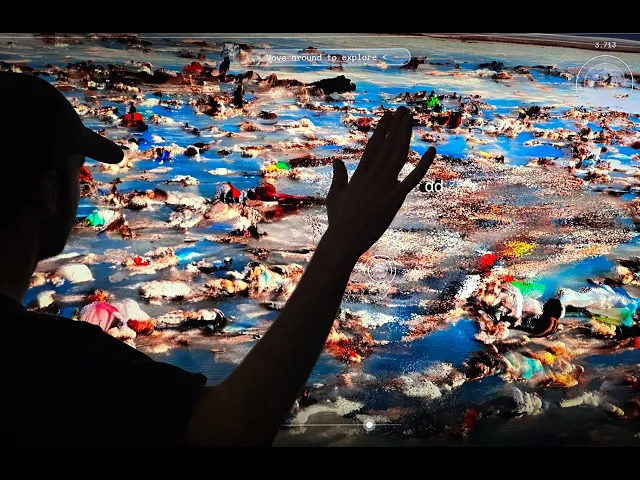

When the installation comes to life, the audience becomes part of the loop. Body tracking translates each gesture into real-time shifts in light, depth, and image. Every movement alters the AI-generated landscapes, transforming observation into participation. In this space, the body reflects the same cycles of extraction and transformation that sustain artificial intelligence. Data, motion, and meaning constantly feed back into one another.
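For readers curious about the mechanics, the loop described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the installation's actual code: it assumes a body tracker that delivers normalized (x, y) keypoints per frame, and the names `VisualState`, `motion_energy`, and `update_state`, along with the gain and smoothing values, are hypothetical.

```python
from dataclasses import dataclass
from math import hypot
from typing import List, Tuple

Point = Tuple[float, float]  # assumed: normalized (x, y) in [0, 1] from a tracker


@dataclass
class VisualState:
    """Hypothetical parameters that drive the projected landscape."""
    light: float = 0.0   # overall brightness, 0..1
    depth: float = 0.0   # perceived depth, 0..1
    shift: float = 0.0   # horizontal image offset, -0.5..0.5


def motion_energy(prev: List[Point], curr: List[Point]) -> float:
    """Mean distance travelled by the tracked points between two frames."""
    if not curr or len(prev) != len(curr):
        return 0.0
    total = sum(hypot(cx - px, cy - py)
                for (px, py), (cx, cy) in zip(prev, curr))
    return total / len(curr)


def update_state(state: VisualState, prev: List[Point], curr: List[Point],
                 smoothing: float = 0.8, gain: float = 10.0) -> VisualState:
    """Blend the previous state with targets derived from the body.

    The previous state feeds into the next one, so each gesture leaves
    a trace that later gestures build on.
    """
    if not curr:
        return state  # nobody in view: keep the current image
    energy = min(1.0, motion_energy(prev, curr) * gain)
    center_x = sum(x for x, _ in curr) / len(curr)
    center_y = sum(y for _, y in curr) / len(curr)

    def blend(old: float, new: float) -> float:
        return smoothing * old + (1.0 - smoothing) * new

    return VisualState(
        light=blend(state.light, energy),          # more movement, more light
        depth=blend(state.depth, 1.0 - center_y),  # higher in frame, more depth
        shift=blend(state.shift, center_x - 0.5),  # lateral position pans the image
    )
```

In a sketch like this, `update_state` would be called once per camera frame, and the resulting values passed on to whatever renders the generated landscape.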

WHO IS US IN ARTIFICIAL US?

Training the mirror

Artificial Us was built by Matías Piña from Chile and Tiago Aragona from Argentina. When we asked default AI models to imagine the places we call home, they failed. Our mountains, deserts, and people appeared distorted or erased. By fine-tuning models with our own photographs and archives, we reclaimed that image. The result is not a perfect mirror, but a closer one, built from our data, our land, and our stories.

FROM MINERALS TO DATA

The logic of extraction has not changed, only its form.

What began with silver and copper now continues through lithium and data. Generative AI depends on both the physical resources of the Global South and the invisible labor that prepares its training data. The same regions that power the world’s devices often remain unseen within the systems they sustain.